Artificial intelligence is dangerous when manipulated and exploited by threat actors. One recent example of this menace is GhostGPT, yet another AI chatbot developed with the sole purpose of making cybercrime easy and convenient. This unrestricted generative AI model garnered immense popularity within days of its launch.

Researchers at Abnormal Security came across GhostGPT in a Telegram channel in November 2024. According to their report, cybercriminals have been actively using GhostGPT to create malware, write phishing messages, and more.

This article looks at what makes GhostGPT a favorite among cybercriminals and how much damage it can inflict on businesses and individuals alike. Let’s get started!

What is GhostGPT?

GhostGPT is a relatively new AI tool aimed at beginner-level and intermediate cybercriminals. It is the latest addition to the uncensored Gen AI ecosystem. Easy to access and convenient to use, GhostGPT doesn’t have the restrictions that legitimate platforms such as ChatGPT, Gemini and Copilot enforce.

It operates without any guardrails, which lets cybercriminals misuse it to generate harmful outputs. Threat actors can ask this AI chatbot anything and get refined answers on topics like malware, phishing scams and hacking strategies.

Abnormal Security describes GhostGPT as “a chatbot specifically designed to cater to cyber criminals.” The AI chatbot gives unfiltered, direct answers to harmful questions and sensitive queries. There’s no flagging or blocking at all.

GhostGPT bears no resemblance to ethical AI models. Authorities have not yet been able to identify the developer of this rogue GPT.

Easy accessibility makes things worse

The worst thing about GhostGPT is how easy it is to access. The pricing is set low enough to attract aspiring threat actors, and there are three tiers: $50 for one week, $150 for 30 days and $300 for 90 days of usage.

The quick and apt responses help cybercriminals speed up their operations and save time. Any threat actor who is interested in using the Gen AI model can access it through a particular Telegram channel.

With pricing this low, there is virtually no barrier to entry. Cybercriminals also do not need any specialized training to access and use GhostGPT. Even less skilled threat actors can easily make the most of this unrestricted AI chatbot.

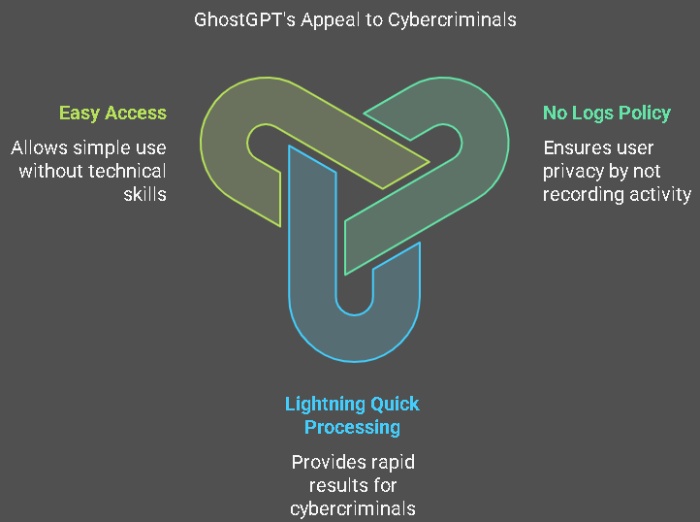

What makes GhostGPT incredibly popular among threat actors?

The developers have worked extensively on this AI chatbot to make it one of the best among its contemporaries. Here are a few features that help it stand out and make it irresistible to cybercriminals:

- No logs policy

This is perhaps the top feature that makes it a favorite among cybercriminals. According to its sellers, GhostGPT does not record user activity. That lets attackers run their queries while concealing their illegitimate activities. GhostGPT gives them a safe haven to submit a query, get answers and move on without leaving a trace behind.

- Lightning-quick processing

GhostGPT offers quick results. It saves cybercriminals a great deal of time by returning the desired responses almost immediately. Generating malicious content has never been so easy, effortless and quick!

- Easy to access

This is another reason why GhostGPT is gaining popularity across the cybercrime ecosystem. There is no need to download any LLM library or even use a jailbreak prompt to start using GhostGPT. Even a beginner-level threat actor with minimal or no skills can pay $50 and start using GhostGPT right away for the next 7 days through a dedicated Telegram channel.

The developers have marketed this uncensored Gen AI model as a tool that can be used to enhance cybersecurity. However, experts believe that this is merely a pretense, given that GhostGPT is available on cybercrime forums and focuses largely on business email compromise (BEC) scams.

Rogue chatbots: the latest trend in the cyber ecosystem

GhostGPT is not the only AI chatbot that comes with no guardrails. It was preceded by similar Gen AI models such as WormGPT, EscapeGPT, WolfGPT and FraudGPT. All these AI tools were developed to be monetized through cybercrime marketplaces.

However, none of them turned out to be as appealing as GhostGPT. They didn’t live up to their claims. On top of that, multiple entry barriers dampened the enthusiasm of cybercriminals.

Rogue chatbots are currently one of the biggest concerns for cybersecurity organizations as these tools do not require high-level coding skills. Also, their quick response time enables cybercriminals to plan and execute their attacks more conveniently.

Accuracy level of GhostGPT

Researchers decided to test the abilities of GhostGPT by asking it to create a Docusign phishing email. The result was convincing: GhostGPT generated a polished template with no grammatical or spelling errors, and it produced the result within a fraction of a second.

The best way to combat rogue AI models is by deploying AI-powered defensive cybersecurity mechanisms with robust phishing protection. Combining these technologies under the guidance of skilled professionals can effectively counter uncensored AI chatbots. The concern is that AI models like GhostGPT are strategically designed to bypass security filters, making advanced protection essential.

Their design allows them to evade traditional security mechanisms. This is why it is important to build a solid cybersecurity system backed by defensive AI. The focus should be on identifying anomalies and on anticipating and neutralizing threats before real damage is done. In short, brands and individuals need to pull up their socks and be fully prepared to prevent and fight cyberattacks.
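To make "identifying anomalies" a little more concrete, here is a minimal defensive sketch in Python. Since AI-written phishing emails can be grammatically flawless, it ignores spelling and looks at structural signals instead: a sender domain that does not match the brand in the display name, links that point somewhere other than the sender's domain, and urgency wording. The function names, the urgency terms and the brand-to-domain mapping are all hypothetical illustrations, not part of any real product, and a production system would rely on far richer signals.

```python
import re
from urllib.parse import urlparse

# Hypothetical urgency phrases chosen for illustration only.
URGENCY_TERMS = ("urgent", "immediately", "action required", "account suspended")


def registered_domain(host: str) -> str:
    """Naive 'last two labels' domain; real systems should use the Public Suffix List."""
    parts = host.lower().strip(".").split(".")
    return ".".join(parts[-2:]) if len(parts) >= 2 else host.lower()


def phishing_signals(display_name, sender_address, body, brand_domains):
    """Return a list of structural warning signs found in one email.

    brand_domains maps a lowercase brand name to its legitimate domain,
    e.g. {"docusign": "docusign.com"} (an assumption supplied by the caller).
    """
    signals = []
    sender_domain = registered_domain(sender_address.rsplit("@", 1)[-1])

    # Signal 1: the display name claims a brand, but the sender domain is not that brand's.
    for brand, domain in brand_domains.items():
        if brand in display_name.lower() and sender_domain != domain:
            signals.append("brand_sender_mismatch")
            break

    # Signal 2: at least one link points to a domain other than the sender's.
    for url in re.findall(r"https?://[^\s\"'<>]+", body):
        host = urlparse(url).hostname or ""
        if registered_domain(host) != sender_domain:
            signals.append("link_domain_mismatch")
            break

    # Signal 3: pressure wording that pushes the reader to act quickly.
    lowered = body.lower()
    if any(term in lowered for term in URGENCY_TERMS):
        signals.append("urgency_language")

    return signals


if __name__ == "__main__":
    brands = {"docusign": "docusign.com"}
    print(phishing_signals(
        "DocuSign",
        "no-reply@docs-sign-secure.example",
        "Action required: review https://login.evil.example/doc immediately",
        brands,
    ))
```

A message that trips several signals at once could be routed to quarantine or human review rather than blocked outright, which keeps false positives manageable while skilled staff make the final call.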