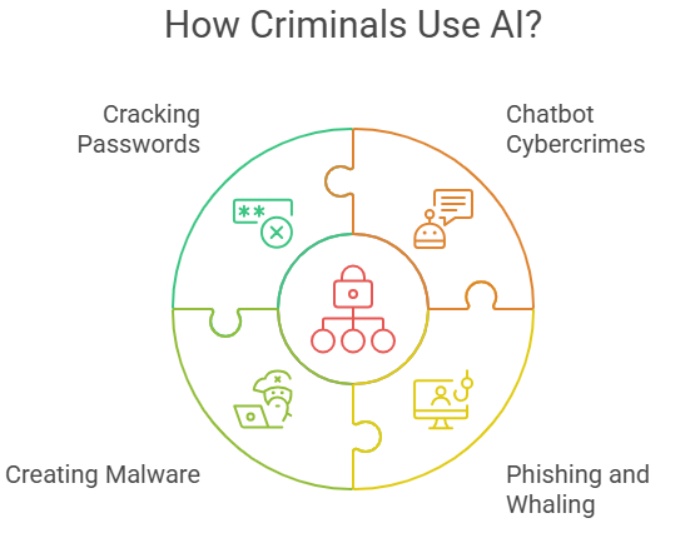

With the inception of generative AI tools, the cybersecurity landscape is witnessing an alarming trend. Threat actors are leveraging the power of AI chatbots for malicious purposes, further streamlining malware attack mechanisms.

Online adversaries have already produced the infamous WormGPT, a ChatGPT-style chatbot reportedly built on the open-source GPT-J model and trained on malware-related data. A newer malicious tool, FraudGPT, is also raising concerns among cybersecurity experts. A threat actor known as CanadianKingpin12 developed this tool and customized it for fraudsters, spammers, and other malicious actors, giving them a ready-made means of launching damaging online attacks.

The latest AI-based malicious tools are DarkBART and DarkBERT. The widespread availability of such generative AI tools has increased the potential for AI phishing attacks across the globe.

The Rise of Next-gen Cybercrime Chatbots

Threat actors are training chatbots on data from the dark web and are even repurposing sophisticated language models that were designed to combat cybercrime. DarkBART, one of these malicious chatbots, stands out as a “dark version” of Google’s conversational AI, Bard. Cybersecurity experts examining the activities of CanadianKingpin12, the threat actor behind these malicious AI bots, describe the development as highly concerning.

CanadianKingpin12's focus extends beyond FraudGPT and DarkBART. Online adversaries also have access to DarkBERT, a language model originally trained on dark web data by South Korean researchers to fight cybercrime. The corrupted version used by attackers reportedly outperforms the other tools and is capable of carrying out sophisticated phishing attacks.

The tool has also been designed to execute advanced social engineering attacks and exploit system vulnerabilities. Such systems can distribute malware and leverage zero-day vulnerabilities for financial gain.

The Convergence of AI and Cybercrime

With the rise of generative AI tools, the cybersecurity landscape is facing an alarming trend. Malicious actors are exploiting AI chatbots, streamlining malware attacks, and escalating AI phishing threats worldwide. From the emergence of dangerous clones like WormGPT and FraudGPT to the development of menacing bots like DarkBART and DarkBERT, the potential for devastating cyber attacks is increasing.

As threats powered by artificial intelligence evolve, the conflict between cybersecurity experts and harmful actors intensifies. This escalating situation calls for innovative defenses, including anti-phishing measures, and collaborative efforts to ensure robust protection. With the implementation of such strategies, we can keep pace with the rapidly changing cybersecurity landscape.

A Looming Cybersecurity Threat: The Future of Cybercrime and Artificial Intelligence

Integrating live internet access and Google Lens image processing into these chatbots makes phishing scams and malware attacks even more menacing. The growing trend of AI chatbot exploitation is alarming, not least because less-skilled threat actors can now access these advanced tools and pose a significant threat to victims.

With the rapid development of FraudGPT and DarkBART, experts predict a challenging future in the global cybersecurity landscape. For malicious actors, easy access to such advanced tools paves the way to more sophisticated and extensive attacks.

Defending Against AI Phishing

Countering AI phishing attacks calls for a proactive approach. Static security measures may prove inadequate against evasive chatbots. ML algorithms empower malicious tools to learn from interactions, adapt strategies, and evade detection systems. That makes them formidable opponents in the world of cybersecurity.

Cybersecurity experts must prioritize AI-based defensive measures to stay ahead in the race. AI-powered cybersecurity solutions that include phishing protection offer a practical way to identify and neutralize new and previously unseen cyber threats, helping organizations maintain a strong defensive stance as cyber risks evolve.
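To make the idea of ML-based phishing protection a little more concrete, here is a minimal sketch of a text classifier. It uses a multinomial Naive Bayes model with add-one smoothing, which is a stand-in technique chosen for brevity and not something the tools discussed above are known to use. The class name `NaiveBayesPhishingFilter`, the labels, and the six training messages are all hypothetical; a real system would need far more data and many more signals than message text.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


class NaiveBayesPhishingFilter:
    """Hypothetical toy filter: multinomial Naive Bayes with add-one smoothing."""

    LABELS = ("phish", "legit")

    def __init__(self):
        self.word_counts = {label: Counter() for label in self.LABELS}
        self.doc_counts = Counter()
        self.vocab = set()

    def train(self, text, label):
        """Record the words of one labeled message."""
        tokens = tokenize(text)
        self.word_counts[label].update(tokens)
        self.doc_counts[label] += 1
        self.vocab.update(tokens)

    def log_score(self, text, label):
        """Log prior plus the sum of smoothed log word likelihoods."""
        total_docs = sum(self.doc_counts.values())
        score = math.log(self.doc_counts[label] / total_docs)
        denom = sum(self.word_counts[label].values()) + len(self.vocab)
        for token in tokenize(text):
            score += math.log((self.word_counts[label][token] + 1) / denom)
        return score

    def classify(self, text):
        """Return the label with the higher log score."""
        return max(self.LABELS, key=lambda label: self.log_score(text, label))


# Made-up training messages, for illustration only.
TRAINING = [
    ("verify your account now or it will be suspended", "phish"),
    ("urgent: confirm your password to avoid suspension", "phish"),
    ("your invoice is overdue click here to pay immediately", "phish"),
    ("meeting notes from the project sync attached", "legit"),
    ("lunch on thursday? let me know what time works", "legit"),
    ("the quarterly report draft is ready for your review", "legit"),
]


def build_filter():
    """Train a filter on the toy examples above."""
    spam_filter = NaiveBayesPhishingFilter()
    for text, label in TRAINING:
        spam_filter.train(text, label)
    return spam_filter


if __name__ == "__main__":
    spam_filter = build_filter()
    print(spam_filter.classify("urgent: verify your password now"))
    print(spam_filter.classify("project report draft attached for review"))
```

A model this small only learns which words appeared in the examples it has seen, which is exactly why static word lists fall short against text generated to evade them. Retraining on fresh samples is what lets a learned filter keep adapting.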

The Evolving Arms Race: Cybersecurity vs. Malicious AI

As the deployment of AI-powered chatbots for cybercrime gains momentum, cybersecurity professionals face an escalating arms race against these malicious players. Evasive AI chatbots threaten to leave traditional security systems struggling to keep up, so cybersecurity experts must adapt their strategies and develop effective countermeasures for these emerging threats.

Security teams need to leverage AI and ML to identify patterns, anomalies, and potential threats more accurately and quickly. AI-powered cybersecurity solutions can detect and mitigate new and unknown cyber threats, helping organizations maintain a robust defensive posture.

Cybersecurity experts, researchers, and organizations must coordinate closely to counter emerging AI-based cyber threats. Sharing threat intelligence and expertise helps identify malicious AI chatbot campaigns and speeds the development of effective countermeasures. In addition, government agencies, private industry, and academia must cooperate to promote the responsible use of AI technology and address the challenges that threat actors pose.
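As a rough illustration of why shared threat intelligence helps, the sketch below merges indicator lists from several sources and highlights the indicators that more than one source has reported independently. The source names, the `.example` domains, and the functions `normalize`, `merge_feeds`, and `corroborated` are all hypothetical. Real exchanges typically rely on structured formats and vetted sharing platforms instead of plain lists.

```python
from collections import defaultdict


def normalize(indicator):
    """Trim whitespace, lowercase, and drop a trailing dot so equal domains match."""
    return indicator.strip().lower().rstrip(".")


def merge_feeds(feeds):
    """Map each normalized indicator to the sorted list of sources reporting it."""
    seen = defaultdict(set)
    for source, indicators in feeds.items():
        for indicator in indicators:
            seen[normalize(indicator)].add(source)
    return {indicator: sorted(sources) for indicator, sources in seen.items()}


def corroborated(merged, min_sources=2):
    """Return indicators reported by at least min_sources distinct sources."""
    return sorted(
        indicator
        for indicator, sources in merged.items()
        if len(sources) >= min_sources
    )


# Made-up feeds from three hypothetical partners.
FEEDS = {
    "vendor_a": ["login-verify.example", "pay-now.example"],
    "isac_b": ["Login-Verify.example. ", "secure-update.example"],
    "university_c": ["secure-update.example", "login-verify.example"],
}

if __name__ == "__main__":
    merged = merge_feeds(FEEDS)
    for indicator in corroborated(merged):
        print(indicator, merged[indicator])
```

An indicator seen by a single organization may be noise, while one confirmed by several partners is a stronger lead. That corroboration is only possible when the parties share what they observe.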

Uniting Against AI Threats

Coordination among experts is essential to countering AI-based cyber threats, and shared threat intelligence helps identify malicious campaigns. Cooperation among government, industry, and academia is vital for responsible AI use.

Final Words

Digital security faces a persistent and growing threat from the convergence of AI and cybercrime. Given advanced phishing attacks and other threat vectors, robust cybersecurity measures are urgently needed.

The battle between malicious actors and security experts will intensify as technology advances. Innovative safeguards, vigilance, and collaboration will therefore be crucial in securing the digital world against threat actors.