AI-backed voice cloning may lead to vishing attacks!

A group of researchers believes that AI-based voice impersonation can sharpen social engineering tactics and make them far more convincing. AI voice cloning narrows the gap between a real voice and a synthetic one. Threat actors can misuse this technology to target organizations, their employees, and the general public.

The researchers have used an AI-generated voice cloning clip to prove their point.

What is a vishing attack?

A vishing (voice phishing) attack is a type of cyberattack in which the threat actor makes convincing, carefully scripted voice calls to trick victims into sharing sensitive details such as passwords, bank account numbers, and social security numbers.

A cybercrook may call you and pretend to be one of your closest friends or family members. They will then create a sense of panic and urgency so that you part with personal data or money.

Vishing attacks are already on the rise, with multiple incidents reported this year. Back in May, the notorious 3AM ransomware gang blended vishing with email bombing to target victims.

Salesforce employees were targeted in June, when attackers pretended to be IT support team members. Something similar happened with Cisco, where a cybercrook carried out a vishing attack and gained access to Cisco’s sensitive data.

Previously, cybercriminals running vishing attacks either impersonated someone without actually sounding like them or played pre-recorded audio clips. In both cases, there was room for suspicion. Some scammers also used text-to-speech systems, but these sounded unnatural and robotic to victims, or led to delayed replies.

This is why AI-backed voice cloning technology is so attractive to threat actors: it removes the telltale flaws that used to give vishing attacks away.

What is AI voice cloning technology?

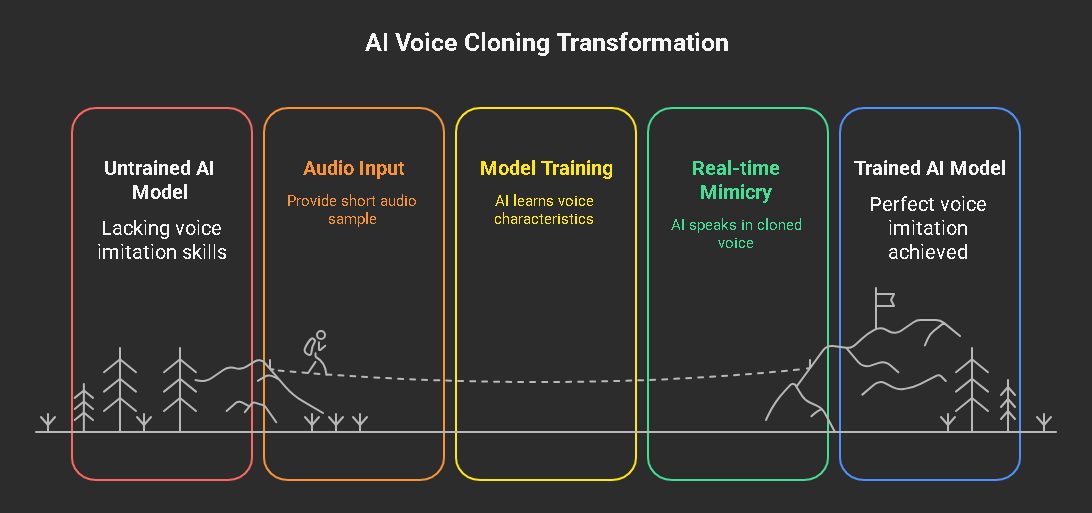

AI-backed voice impersonation refers to the process of training AI models to imitate a given voice. It is now possible to imitate a specific voice from even a short recorded audio clip. AI voice cloning enables cybercriminals to speak in real time, responding naturally during phone conversations. It is also possible to take a pre-written text and deliver it flawlessly in the cloned voice. The result is convincing enough to trick victims into believing that they are talking to someone they already know well.

The NCC Group researchers trained an AI voice cloning tool on a short, publicly available audio clip. Within an hour or so, they managed to develop a vishing system that could mimic any voice in real time.

Here’s what makes the situation all the more scary! The researchers did not use any high-end, state-of-the-art equipment or machinery. They created the AI voice cloning tool using readily available and affordable tools and equipment.

What does your future look like with AI voice cloning on the rise?

Researchers and cybersecurity experts warn that AI voice impersonation could significantly escalate cyberattacks and social engineering schemes in the near future. For example, cybercriminals may soon clone the voices of famous public figures and celebrities to execute large-scale scams.

Additionally, sophisticated attackers could exploit AI voice cloning to bypass enterprise security systems by mimicking the voices of key decision-makers such as CEOs, founders, and directors. This emerging threat underscores the critical need for advanced phishing protection and robust security measures to safeguard organizations against AI-driven attacks.

Besides, because AI voice cloning tools are easy to obtain and easy to learn, they can attract a huge wave of inexperienced threat actors.

Even though threat actors still rely mostly on conventional vishing tactics, they are likely to migrate to AI-backed voice cloning soon, making it difficult for victims to detect any foul play.

How to prepare for an upsurge in vishing attacks?

First things first! You can no longer trust your ears. Even if the voice on the other side sounds exactly like your closest friend or family member, you could be talking to a cunning, AI-savvy cyber scammer.

Next, limit the public availability of voice clips of your executives. Keep a close watch on podcasts, speeches, and recorded meetings, and restrict how easily they can be accessed.

Also, setting up a pre-agreed code word can be a brilliant move to tackle such malicious vishing attempts. You should also deploy multi-factor authentication (MFA) to add an extra layer of protection. Lastly, organize proper employee training so that staff can identify vishing attacks.
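For teams that want to build the code word check into a help desk tool, here is a minimal Python sketch of how it might work. Everything in it is an assumption made for illustration: the function names (`enroll_code_word`, `verify_code_word`, `decide_action`), the normalization rule, and the iteration count are hypothetical and not part of any product or of the researchers' work. The idea is to store only a salted hash of the code word and to compare in constant time.

```python
import hashlib
import hmac
import os

# Assumed work factor for the key-derivation function; tune for your hardware.
ITERATIONS = 100_000


def _normalize(code_word: str) -> bytes:
    """Lowercase and collapse whitespace so spoken variations still match."""
    return " ".join(code_word.lower().split()).encode("utf-8")


def enroll_code_word(code_word: str) -> tuple[bytes, bytes]:
    """Return (salt, digest) so the code word itself is never stored."""
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", _normalize(code_word), salt, ITERATIONS)
    return salt, digest


def verify_code_word(spoken: str, salt: bytes, digest: bytes) -> bool:
    """Hash what the caller said and compare it in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", _normalize(spoken), salt, ITERATIONS)
    return hmac.compare_digest(candidate, digest)


def decide_action(is_sensitive: bool, code_word_ok: bool) -> str:
    """Hypothetical policy: sensitive requests need a correct code word."""
    if not is_sensitive:
        return "proceed"
    return "proceed" if code_word_ok else "hang_up"


if __name__ == "__main__":
    salt, digest = enroll_code_word("Blue Heron")
    ok = verify_code_word("blue heron", salt, digest)
    print(decide_action(is_sensitive=True, code_word_ok=ok))
```

A code word is only as strong as its secrecy, so a check like this works best alongside MFA rather than as a replacement for it.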

As AI approaches perfection, one day at a time, it is essential to remain skeptical. If the person on the call wants you to share your personal details or requests that you send money urgently, it is better to hang up, pause, and think clearly before responding.

Next time your manager calls asking for your account details, or a close friend calls asking for immediate monetary help, ask yourself: could that be AI?